ASLA - the challenge

Welcome to the ASLA challenge, where you build small, two-dimensional organic molecules. At each level of the challenge, you are given a fixed set of atoms and must construct the full range of the most stable molecular compounds that can be built from them.

While puzzling over how to build stable molecular compounds, you will be assisted by an ASLA agent, an artificial neural network trained to complete any unfinished atomistic structure into the most stable compound possible. Read more about the background of the ASLA project and the technology behind an ASLA agent below.

The challenge has two series of three levels each, and the levels unlock sequentially as you complete them one by one. Click on "Level 1" or "Level 4" to start.

Background

ASLA (Atomic Structure Learning Algorithm) is part of a long-term research project at Aarhus University funded by the VILLUM Foundation and the Danish National Research Foundation through the Center of Excellence "InterCat". The project aims to develop methods for the automated design of matter.

Imagine an artificial intelligence resource, an agent, that, given an incomplete molecular structure, can indicate where atoms should be added to obtain stable molecules. ASLA is a method for obtaining such an agent, and this website illustrates how intelligent (or not) such agents may become.

The ASLA method for training agents is inspired by the AlphaZero algorithm, which has enabled computers to beat the human world champions of classical board games such as chess and Go. A striking feature of AlphaZero is that the computer learns the game by playing against itself. Similarly, ASLA is formulated such that the agents learn chemistry without input from humans, only by building structures whose stability (the formation energy) is evaluated by another computer program. This principle is called reinforcement learning. To save computer time, however, the agents on this website have also benefited from a database of solutions and were thus partially trained via supervised learning. More on the ASLA method can be found in the freely available scientific paper J. Chem. Phys. 151, 054111 (2019) and in the following section.
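The reinforcement learning loop described above can be illustrated with a toy sketch. It is not the ASLA code: `toy_energy` is a hypothetical stand-in for the formation energy that the real setup obtains from a separate program, the agent is replaced by random choices, and the grid is tiny. What it shows is the overall structure: atoms are placed one at a time, only the finished structure is scored, and that single score is used as the training target for every placement made along the way.

```python
import random
from typing import Callable, List, Tuple

Position = Tuple[int, int]

def toy_energy(positions: List[Position]) -> float:
    """Hypothetical stand-in for a formation energy (lower = more stable):
    each pair of atoms on neighbouring grid points lowers the energy by 1."""
    energy = 0.0
    for i, (x1, y1) in enumerate(positions):
        for x2, y2 in positions[i + 1:]:
            if abs(x1 - x2) + abs(y1 - y2) == 1:
                energy -= 1.0
    return energy

def run_episode(n_atoms: int, grid: int,
                energy_fn: Callable[[List[Position]], float],
                rng: random.Random):
    """Build one structure atom by atom; only the finished structure is scored."""
    positions: List[Position] = []
    free = [(x, y) for x in range(grid) for y in range(grid)]
    for _ in range(n_atoms):
        # A trained agent would choose the free pixel with the highest Q-value;
        # this sketch chooses a random free pixel instead.
        choice = rng.choice(free)
        free.remove(choice)
        positions.append(choice)
    reward = -energy_fn(positions)  # higher reward = more stable final structure
    # Each placement (partial structure, chosen pixel) gets the final reward as
    # its target, matching a Q-value that predicts the final molecule's stability.
    targets = [(tuple(positions[:k]), positions[k], reward) for k in range(n_atoms)]
    return positions, reward, targets

rng = random.Random(0)
positions, reward, targets = run_episode(n_atoms=4, grid=5, energy_fn=toy_energy, rng=rng)
print(positions, reward)
```

Crediting every step with the final reward is one simple choice that fits the description of a Q-value as the expected stability of the final molecule; other credit-assignment schemes are possible.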

The technology

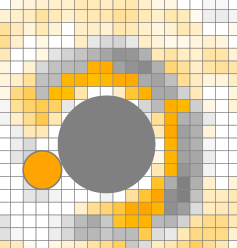

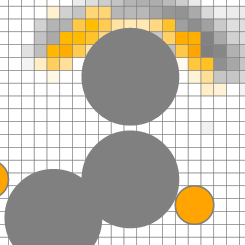

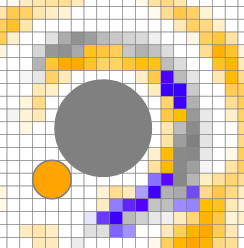

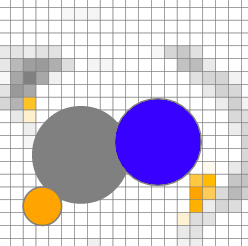

ASLA builds on a convolutional neural network - the ASLA agent. The input to the network is an image representation of an unfinished molecular compound, with one layer for each atom type. Each atomic position is initially represented by a single lit pixel. A first network layer (not shown) broadens these pixels to their neighbors with a Gaussian-type intensity distribution; see J. Chem. Phys. 153, 044107 (2020). After three hidden layers, the network outputs an image of so-called Q-values, again with one layer for each atom type. The Q-value at a given pixel indicates the expected stability of the final molecule if the next atom is placed at that pixel. The Q-values are shown as raster plots, where darker intensity reflects higher expected stability.
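The input and output representations described above can be sketched with NumPy. This is an illustration under assumptions, not the ASLA implementation: the grid size, `sigma`, `radius` and the function names are hypothetical, the broadening is applied as preprocessing rather than as a network layer, the trained hidden layers are omitted entirely, and random numbers stand in for the network's Q-value output.

```python
import numpy as np

def atoms_to_image(atoms, atom_types, size=20):
    """One layer per atom type; each atom lights up a single pixel."""
    image = np.zeros((len(atom_types), size, size))
    for symbol, (row, col) in atoms:
        image[atom_types.index(symbol), row, col] = 1.0
    return image

def gaussian_broaden(image, sigma=1.0, radius=3):
    """Spread each lit pixel onto its neighbours with a Gaussian profile."""
    offsets = np.arange(-radius, radius + 1)
    dy, dx = np.meshgrid(offsets, offsets, indexing="ij")
    kernel = np.exp(-(dx**2 + dy**2) / (2.0 * sigma**2))  # peak value 1 at the centre
    layers, height, width = image.shape
    padded = np.zeros((layers, height + 2 * radius, width + 2 * radius))
    for layer, row, col in zip(*np.nonzero(image)):
        # In padded coordinates the pixel sits at (row + radius, col + radius),
        # so the kernel window spans row .. row + 2 * radius.
        padded[layer, row:row + 2 * radius + 1, col:col + 2 * radius + 1] += (
            image[layer, row, col] * kernel
        )
    return padded[:, radius:-radius, radius:-radius]  # crop back to the original size

def greedy_placement(q_values, atom_types):
    """Atom type and pixel with the highest Q-value (darkest pixel in the plots)."""
    layer, row, col = np.unravel_index(np.argmax(q_values), q_values.shape)
    return atom_types[layer], (int(row), int(col))

atom_types = ["C", "H", "O"]
image = atoms_to_image([("C", (10, 10)), ("O", (10, 12))], atom_types)
broadened = gaussian_broaden(image)
q_values = np.random.default_rng(0).normal(size=image.shape)  # placeholder for network output
print(greedy_placement(q_values, atom_types))
```

A practical version would also mask pixels that are already occupied before taking the maximum.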

The stability of a molecular compound can be judged by performing elaborate quantum mechanical computations in the form of density functional theory (DFT) calculations. Such calculations are performed when the training data for the ASLA agent is generated. The Q-values displayed on this website thus represent what the ASLA agent learned during its training. Extracting on the order of 1000 Q-values from an ASLA neural network takes on the order of milliseconds, whereas completing just a single DFT calculation during training took on the order of tens of seconds. The ASLA agent thus represents a computationally very efficient way of handling quantum mechanical information. Since the ASLA agent further attempts to predict the stability not just of the given structure but of the final structure once all atoms have been placed, it must both cope with solving quantum mechanics and build chemical intuition. When interacting with an ASLA agent, keep in mind that it is nothing but a large set of numbers being multiplied and added, yet it appears to have gained some artificial intelligence.
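To put the quoted orders of magnitude side by side, the short calculation below assumes, as a rough illustration rather than a measured benchmark, that scoring each of the roughly 1000 candidate placements directly would require one DFT calculation of about 10 s. Under that assumption the network evaluation is roughly ten million times faster.

```python
# Orders of magnitude quoted in the text (lower end assumed where a range is given)
n_candidates = 1000     # Q-values read out in one network evaluation
network_time_s = 1e-3   # "of the order ms"
dft_time_s = 10.0       # "of the order 10s of seconds" per DFT calculation

# Assumption: one DFT calculation per candidate placement.
dft_total_s = n_candidates * dft_time_s
speedup = dft_total_s / network_time_s

print(f"DFT for all candidates: {dft_total_s:.0f} s (about {dft_total_s / 3600:.1f} h)")
print(f"Network: {network_time_s * 1e3:.0f} ms, speed-up of order {speedup:.0e}")
```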

A database of highly stable molecular compounds was built using the GOFEE algorithm as implemented in the AGOX package. These compounds were used as training seeds for both supervised and reinforcement learning of the ASLA agent. In supervised mode, some atoms of the seed structures are displaced randomly and the energy of the resulting structure is recalculated. In reinforcement learning mode, some atoms are removed from the seeds and placed again according to an epsilon-greedy policy based on the Q-values predicted by the ASLA agent. Up to 10000 training steps were performed (1/3 in supervised mode, 2/3 in reinforcement learning mode).
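The epsilon-greedy policy mentioned above can be sketched as follows. With probability `epsilon` the next atom goes to a random free pixel (exploration); otherwise it goes to the free pixel with the highest predicted Q-value (exploitation). The function name, the `occupied` mask and the choice to let every atom-type layer compete are assumptions for illustration; a real implementation would presumably restrict the choice to atom types still left to place.

```python
import numpy as np

def epsilon_greedy_placement(q_values, occupied, epsilon, rng):
    """Return (atom_type_index, row, col) for the next atom.

    q_values: array of shape (n_types, height, width) predicted by the agent.
    occupied: boolean array of shape (height, width); True where an atom sits.
    rng: a numpy.random.Generator.
    """
    # Candidate moves: every free pixel in every atom-type layer.
    free = np.broadcast_to(~occupied, q_values.shape)
    if rng.random() < epsilon:
        # Explore: uniformly random free placement.
        candidates = np.argwhere(free)
        layer, row, col = candidates[rng.integers(len(candidates))]
    else:
        # Exploit: free placement with the highest predicted Q-value.
        masked = np.where(free, q_values, -np.inf)
        layer, row, col = np.unravel_index(np.argmax(masked), q_values.shape)
    return int(layer), int(row), int(col)

rng = np.random.default_rng(0)
q = np.zeros((2, 4, 4))
q[1, 2, 3] = 5.0
occupied = np.zeros((4, 4), dtype=bool)
print(epsilon_greedy_placement(q, occupied, epsilon=0.1, rng=rng))
```

Lowering `epsilon` over the course of training shifts the agent from exploring new structures towards exploiting what it has learned.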

The neural networks used have the following dimensions: 3 hidden layers, 10 filters of 15x15 pixels. The total number of trainable parameters in the agents is around 60000.
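As a consistency check on the quoted size, the parameter count of a plain stack of convolutional layers can be worked out by hand. The sketch assumes that every layer, including the output layer producing the Q-value images, uses 15x15 kernels with one bias per filter, that the Gaussian broadening layer has no trainable parameters, and that there are three atom types; none of these details are stated above. Under those assumptions the count comes out at 58,533, consistent with "around 60000" (four atom types give 63,034).

```python
def conv_params(in_channels: int, out_channels: int, kernel: int = 15) -> int:
    """Trainable parameters of one 2D convolution: weights plus one bias per filter."""
    return kernel * kernel * in_channels * out_channels + out_channels

def asla_like_param_count(n_atom_types: int, hidden_layers: int = 3,
                          filters: int = 10, kernel: int = 15) -> int:
    """Hypothetical layout: input -> 3 hidden conv layers -> Q-value output layer."""
    total = conv_params(n_atom_types, filters, kernel)      # input image -> hidden 1
    for _ in range(hidden_layers - 1):
        total += conv_params(filters, filters, kernel)       # hidden -> hidden
    total += conv_params(filters, n_atom_types, kernel)     # hidden 3 -> Q-value layers
    return total

print(asla_like_param_count(3))  # 58533
```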

Similarity between molecules is evaluated by comparing the eigenvalue spectra of their connectivity matrices. Because such spectra are not unique (different molecules can share the same spectrum), the similarities reported by the website may sometimes appear counterintuitive.
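A minimal sketch of a spectrum-based comparison, assuming that two atoms count as connected when they are closer than a hypothetical `cutoff` and that atom types are ignored; the website's actual bond criterion and distance measure are not given above. The example also shows why spectra are not unique: a star of five atoms and a four-membered ring plus one detached atom have different connectivity but identical spectra (-2, 0, 0, 0, 2), so their spectral distance is zero.

```python
import numpy as np

def connectivity_matrix(positions, cutoff=1.2):
    """Symmetric 0/1 matrix: 1 if two atoms are closer than `cutoff` (hypothetical)."""
    pos = np.asarray(positions, dtype=float)
    dist = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=-1)
    conn = (dist < cutoff).astype(float)
    np.fill_diagonal(conn, 0.0)  # an atom is not bonded to itself
    return conn

def spectrum(positions, cutoff=1.2):
    """Eigenvalues in ascending order; independent of how the atoms are numbered."""
    return np.linalg.eigvalsh(connectivity_matrix(positions, cutoff))

def spectral_distance(pos_a, pos_b, cutoff=1.2):
    """Euclidean distance between the spectra of two structures with equal atom counts."""
    return float(np.linalg.norm(spectrum(pos_a, cutoff) - spectrum(pos_b, cutoff)))

star = [(0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)]
ring_plus_one = [(0, 0), (1, 0), (1, 1), (0, 1), (5, 5)]
chain = [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0)]

print(spectral_distance(star, ring_plus_one))  # numerically zero: same spectrum
print(spectral_distance(star, chain))          # clearly non-zero
```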

About

This website was constructed by the following team:

- 2022: Mads-Peter Verner Christiansen and Bjørk Hammer

- 2019: Søren Ager Meldgaard, Henrik Lund Mortensen, Kabwe Sango, and Bjørk Hammer

You can find more information on our recent research projects here.

Sponsors